Sending Veeam backups to object storage such as Azure Blob has become a hot topic in the last few years. According to Veeam’s quarterly report for the end of 2021, Veeam customers moved over 500 PB of backups just into the top 3 cloud object storage vendors alone.

With many organisations starting to dip their Veeam toes into object storage, I thought I would write a bit more about the subject. This blog post is aimed at backup administrators who want to better understand, from a Veeam perspective, how to work with public cloud object storage, specifically Azure Blob.

Compared to traditional NAS or disk-based block storage, object storage is a completely different way of storing and accessing data. For example, in object storage, it’s intended that files are not modified. In fact, there is no way to modify part of an object’s data, and any change requires deletion and replacement of the whole object.

In Azure terminology, objects are called ‘blobs’ and are stored in containers within a storage account; a container can be thought of as similar to a volume on a disk but far more scalable. Blob storage is a pay-per-use service. Charges are monthly and cover the amount of data stored, access to that data and, in the case of the cool and archive tiers, a minimum required retention period. In case you haven’t realised, Blob storage is Microsoft’s object storage solution.

There are numerous ways to use Microsoft Azure Blob with Veeam Backup & Replication. For example, Azure Blob can be used as an Archive Tier target within a SOBR (Scale-Out Backup Repository) for long-term retention of backups, or as an archive repository for Veeam NAS backups, and some readers may even be familiar with the external repositories function.

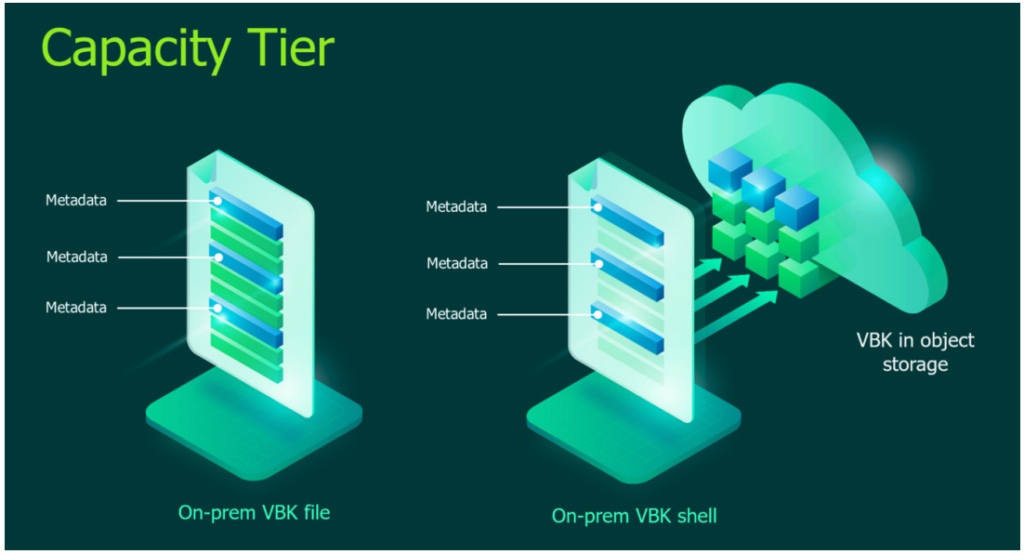

The most popular method is using Blob as a Veeam Capacity Tier, which is configured within a Veeam Scale-Out Backup Repository.

Why is Azure Blob so popular?

Azure Blob can provide many benefits, such as:

Off-Site Backup – For on-premises workloads, cloud object storage is located off-site by its very definition, helping organisations to achieve “1” in the 3-2-1 backup rule.

Media Break – Object storage provides a media break as required by “2” in the 3-2-1 rule of backups.

Scalability – A single Blob endpoint that transparently grows or shrinks storage capacity behind it as needed.

High-Availability – Losing a disk, a node, a rack or even a whole datacentre does not impact its availability, with backups and restores continuing to work from the same Blob endpoint as if nothing happened. Be sure to read the redundancy options discussed later in this blog to better understand the different redundancy levels.

No File System – No file system bugs, quirks and limitations for Veeam to deal with. Early adopters of ReFS will be able to relate to backup-breaking bugs found in Windows Server 2016 and 2019.

With the upcoming Veeam Backup & Replication v12 supporting backups directly to object storage, it’s about to get even more popular.

Network Bandwidth

It’s important to consider bandwidth whenever sending backups across a network, especially to Azure Blob. Depending on the backup requirements, there may not be enough bandwidth to meet the required Recovery Point Objectives. For calculating bandwidth requirements, I’ve used the formula below with good results.

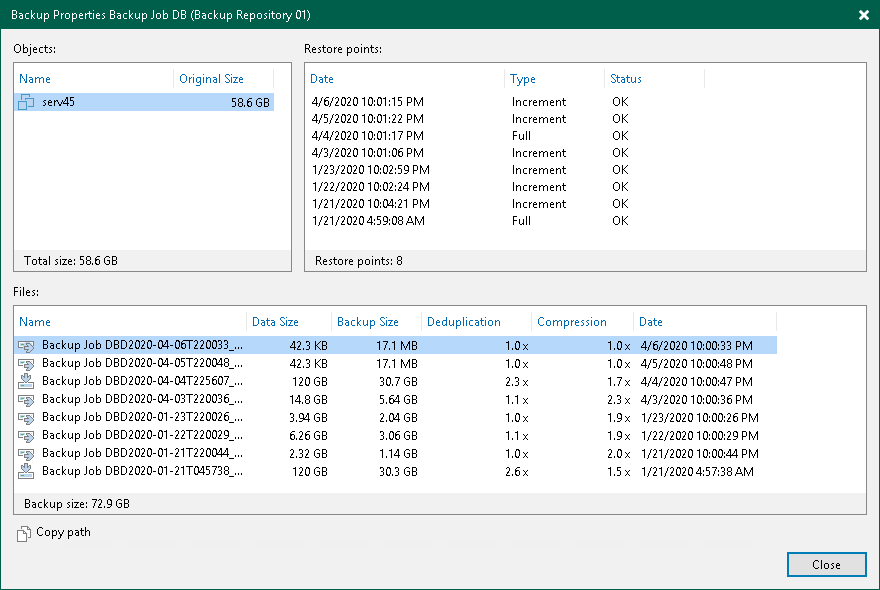

For existing Veeam environments with created backups, it’s possible to view summary information about the backup files. To do so, navigate to the Veeam Backup & Replication console, then select Home on the left-hand navigation pane, choose the backups section, then right-click the backup job to be offloaded to Azure Blob and choose properties.

If there are no existing backup files to review, such as a greenfield site, use the following calculator by Timothy Dewin to “guesstimate” the full and incremental backup sizes. Using the calculator does require a basic understanding of the workloads being protected such as the consumed space, change rate and expected data reduction rates. Note: This calculator has been deprecated but I’ve been unable to find a suitable replacement that details full and individual incremental backup sizes to the same level of detail.

Veeam avoids storing multiple copies of the same data by building and maintaining indexes to verify whether the data that is being sent to Blob is unique and has not been offloaded earlier. So it’s recommended to leave XFS/ReFS enabled in the calculator since the storage savings XFS/ReFS provides are similar to expected object storage consumption.

Once the full backup and incremental backup size have been determined, use the following WAN bandwidth calculator by Timothy Dewin.

| | Full | Incremental |
| --- | --- | --- |
| Copy Window | Useful for estimating the time required to offload the first full backup; this is a once-off process. | Use the Recovery Point Objective (how often the backup/backup copy job runs) |
| Data Size | The size of the full backup file | The size of the incremental backup file |
| Data Reduction | No further data reduction should be used | No further data reduction should be used |
| Change Rate | 100% change rate should be used | 100% change rate should be used |

The full backup is larger than the incremental, so it will take the longest to complete. The copy window setting in the calculator can be increased until the required bandwidth matches the network bandwidth available to Veeam.

If the offload to Blob is estimated to take longer than can be tolerated, consider leveraging Azure Data Box, which provides a way to transfer the initial backup files to Azure without requiring more bandwidth. It’s possible to use the Data Transfer estimator, available within the Azure Portal when creating a storage account, to estimate the time required to transfer the initial full backup.
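The arithmetic behind this kind of estimate can be sketched in a few lines of Python. This is a minimal sketch and not necessarily the formula shown in the image above or used by the calculator: the function names and example sizes are hypothetical, sizes are in decimal gigabytes, and protocol overhead and competing traffic are ignored.

```python
def required_mbps(data_size_gb: float, window_hours: float) -> float:
    """Sustained megabits per second needed to move data_size_gb
    (decimal gigabytes) within window_hours."""
    if window_hours <= 0:
        raise ValueError("window_hours must be positive")
    megabits = data_size_gb * 8 * 1000  # 1 GB = 8,000 megabits
    return megabits / (window_hours * 3600)


def transfer_hours(data_size_gb: float, link_mbps: float) -> float:
    """Hours needed to move data_size_gb over a link sustaining link_mbps."""
    if link_mbps <= 0:
        raise ValueError("link_mbps must be positive")
    return (data_size_gb * 8 * 1000) / link_mbps / 3600


# Hypothetical example: a 100 GB incremental with a 24-hour RPO,
# and a 2,000 GB first full backup over a 100 Mbps link.
print(round(required_mbps(100, 24), 2))     # 9.26
print(round(transfer_hours(2000, 100), 1))  # 44.4
```

In this made-up example the incremental fits comfortably in the daily window, while the first full backup would occupy the link for almost two days.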

Azure Storage Accounts

Veeam writes backup data into Azure storage accounts. Think of these as a pool for all the Azure Storage data objects, including blobs, file shares, queues, tables, and disks. Any storage resource that is deployed into a storage account shares the limits that apply to that storage account.

Additionally, each storage account provides a unique namespace for the Azure Storage data that’s accessible from anywhere in the world over HTTP or HTTPS. This is called a public endpoint.

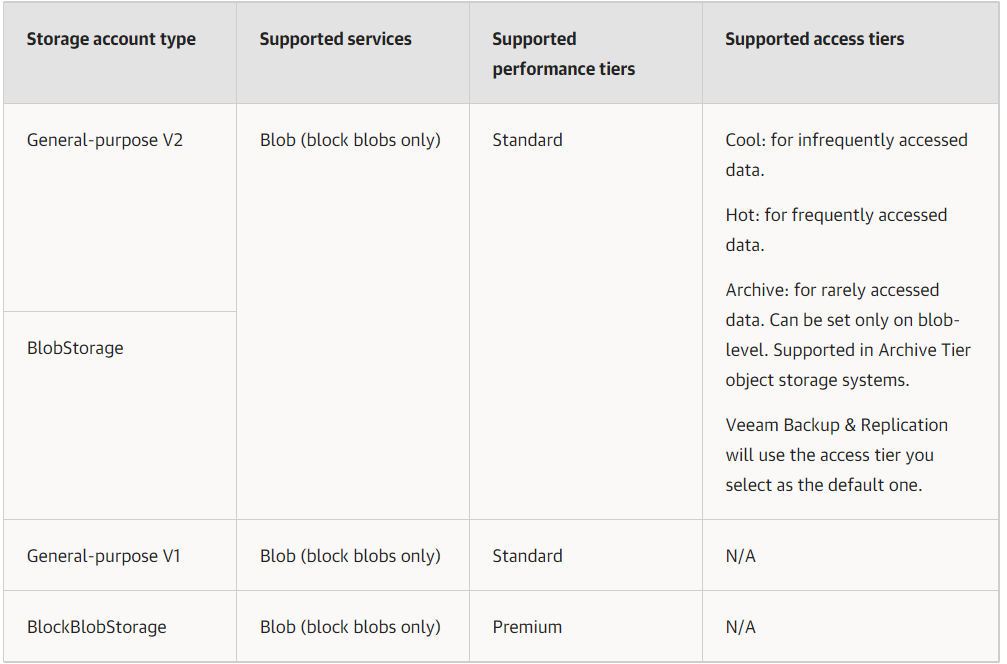

The following types of Azure storage accounts are supported by Veeam

I’ve noted a few common recommendations for Azure storage accounts used by Veeam.

Azure Resource Manager Lock: Applying an Azure Resource Manager lock to the storage account is recommended to protect the account from accidental or malicious deletion or configuration change. Note that locking a storage account does not prevent data within that account from being deleted. It only prevents the account itself from being deleted.

Private endpoint: Leverage private endpoints for the Azure Storage account so Veeam securely accesses the data over a private link. Another recommendation is to configure the storage firewall to limit all connections except for Veeam.

Covering security best practices deserves its own write-up, which I’ll leave for another day. In the meantime, there are plenty of great resources online only a quick google away.

You may find recommendations for other Azure Blob use cases which are not supported by Veeam. Here are some of the most common that I’ve come across.

Versioning: Veeam does not support the Versioning feature for Microsoft Azure object storage. If Veeam is configured to use a Storage Account with blob versioning enabled, keep in mind the extra costs incurred for storing objects that have been removed by the retention policy.

Immutability: Veeam does not support Azure object-level immutability yet. Do not enable this feature as it might lead to significant data loss. This is likely to change in future versions beyond Veeam Backup & Replication v11.

Soft delete: Don’t enable ‘Soft delete’ as it’s not supported by Veeam Backup & Replication.

Blob storage lifecycle management: Data in the object storage container must be managed solely by Veeam, including retention and data management. Enabling lifecycle rules may result in backup and restore failures.

Pricing

Since Azure Blob utilises pay-per-use, it can be quite daunting to customers who are new to the cloud. Besides the cost of capacity used, there are several other charges to be aware of, such as transactions (reads and/or writes) and egress for data read back to the on-premises environment.

Data access costs: Data access charges increase as the tier gets cooler. For data in the Cool and Archive access tier, Azure charges a per-gigabyte data access charge for reads.

Transaction costs: A per-transaction charge applies to all tiers and increases as the tier gets cooler.

Geo-replication data transfer costs: This charge only applies to accounts with geo-replication configured, including GRS and RA-GRS. Geo-replication data transfer incurs a per-gigabyte charge.

Outbound data transfer costs: Outbound data transfers (data that is transferred out of an Azure region) incur billing for bandwidth usage on a per-gigabyte basis.

Tier costs: All storage accounts use a pricing model that is based on the blob’s tier. As expected, the cost per gigabyte decreases the cooler the tier becomes. There are some catches though: Azure places minimum data retention periods on the cooler tiers.

It’s recommended to use the Azure Pricing Calculator to perform “what if” analyses.

Pricing has been covered in depth by other authors, so for further reading, I recommend the following articles:

Michael Paul

Part 1: https://www.veeam.com/blog/cloud-object-storage-choosing-provider.html

Part 2: https://www.veeam.com/blog/cloud-object-storage-implementation.html

Part 3: https://www.veeam.com/blog/cloud-object-storage-benchmarks.html

Ed Gummet & Dustin Alberton

Designing and Budgeting for AWS Object Storage with Veeam Cloud Tier

Redundancy

It’s important to consider which level of redundancy is required to meet the backup needs. Azure Storage Accounts offer several different redundancy options which are detailed below.

Locally-redundant storage (LRS): This is the lowest-cost option that provides basic protection against server rack and drive failures. Three copies of the data are stored in a single zone within the selected region. This is recommended for noncritical backups.

Geo-redundant storage (GRS): This can be considered the intermediate option with failover capabilities to a secondary region. Recommended for most backup scenarios.

Zone-redundant storage (ZRS): This provides additional protection against datacentre-level failures. Recommended for critical backups that require high availability.

Geo-zone-redundant storage (GZRS): An optimal data protection solution that combines the offerings of both GRS and ZRS. Recommended for highly critical backups.

Choosing the right level of redundancy is determined by budget and how important the backups are. The more important the backups, the higher level of redundancy is recommended. Obviously, budget is often the limiting factor stopping most organisations from choosing the highest redundancy option.

Further information on Storage Account redundancy can be found in the following link: https://docs.microsoft.com/en-us/azure/storage/common/storage-redundancy

Performance Tiers

Azure Storage block blob storage offers two different performance tiers:

Premium: Data is stored on solid-state drives (SSDs) which are optimized for low latency. Premium performance storage is for workloads that require fast and consistent response times.

Standard: Standard performance supports different access tiers to store data in the most cost-effective manner. It’s optimized for high capacity and high throughput on large data sets.

It’s recommended to use the ‘standard’ performance tier for Veeam.

Access Tier

There are three different choices for Access Tiers:

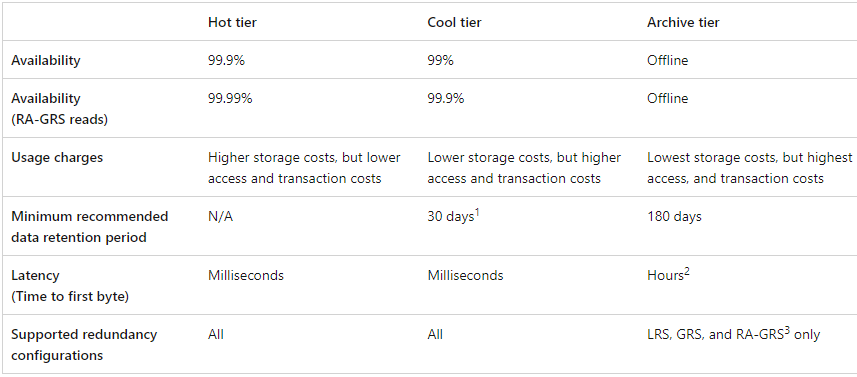

A few key characteristics differentiate them as the Blob tiers get colder.

The storage costs get lower but access and transaction costs increase. Minimum data retention increases from nil for the hot tier, to 30 days for the cool tier, then to 180 days for the archive tier. For example, if an account is using the cool tier and an object is stored for 20 days and then deleted, the account will be charged as though the object was stored for 30 days. For Azure Blob, data retention is based on when the data was migrated to the tier.

Archive tier retrieval latency jumps to hours between requesting the data and the data becoming available. Note: This is not the same thing as read latency. Time to first byte is the delay before the data is available to be accessed by Veeam at all.

The cool tier availability is 99%, which is slightly less than the 99.9% available for the hot tier. For read-access geo-redundant storage (RA-GRS), the cool tier availability is 99.9%, while the hot tier is 99.99%.

When it comes to performance, a single blob on both the cool tier and hot tier has a throughput limit of 60 MiB per second. Fortunately, while you can only read or write to a single blob at up to 60 MiB per second, Veeam doesn’t store all backups in a single blob, more like in millions of blobs. The total limit for a storage account is 25 Gbps.
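The minimum retention rule can be expressed as a small calculation. The sketch below uses the retention periods quoted above (nil, 30 and 180 days); the function and dictionary names are hypothetical and only illustrate the billing logic as described in this post.

```python
# Minimum retention periods in days, as quoted in this post.
MIN_RETENTION_DAYS = {"hot": 0, "cool": 30, "archive": 180}


def billed_days(tier: str, days_stored: int) -> int:
    """Days the account is charged for: the actual storage time or
    the tier's minimum retention period, whichever is longer."""
    return max(days_stored, MIN_RETENTION_DAYS[tier])


def early_deletion_days(tier: str, days_stored: int) -> int:
    """Extra days charged because the object was removed early."""
    return billed_days(tier, days_stored) - days_stored


print(billed_days("cool", 20))          # 30
print(early_deletion_days("cool", 20))  # 10
print(billed_days("archive", 200))      # 200
```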
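To put those percentages in perspective, they can be converted into the amount of unavailability each one tolerates over a month. This is a rough sketch that assumes a 30-day month and treats the figure as simple uptime, which is a simplification of how an availability SLA is actually measured; the function name is hypothetical.

```python
def max_downtime_hours(availability_percent: float,
                       hours_in_month: float = 720.0) -> float:
    """Hours per month that may be unavailable at the given availability."""
    unavailable_fraction = (100.0 - availability_percent) / 100.0
    return round(unavailable_fraction * hours_in_month, 3)


for percent in (99.0, 99.9, 99.99):
    print(percent, max_downtime_hours(percent))
# 99.0 7.2
# 99.9 0.72
# 99.99 0.072
```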
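As a back-of-the-envelope check on those two limits, the sketch below works out how many blobs would need to be transferred in parallel, each at the 60 MiB per second per-blob rate, before the 25 Gbps account limit is reached. The function name is hypothetical and the calculation ignores network and protocol overhead.

```python
import math


def blobs_to_reach_account_limit(per_blob_mib_s: float,
                                 account_gbps: float) -> int:
    """Parallel blob transfers needed to reach the account limit."""
    per_blob_bytes_s = per_blob_mib_s * 1024 * 1024  # MiB/s to bytes/s
    account_bytes_s = account_gbps * 1e9 / 8         # Gbps to bytes/s
    return math.ceil(account_bytes_s / per_blob_bytes_s)


print(blobs_to_reach_account_limit(60, 25))  # 50
```

Since Veeam spreads a backup across a very large number of blobs, the per-blob limit is unlikely to be the bottleneck; the available WAN bandwidth usually is.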

One of the biggest considerations when choosing an access tier will be the ongoing charges. Choosing the most cost-effective tier can sometimes be difficult as the obvious choice of selecting the tier with the lowest per-GB storage charge may not always be the cheapest option. Factors such as minimum data retention, data access costs and transaction costs just to name a few all come into play when determining the charges.

Importantly, how Veeam has been configured will influence which tier is best, specifically with regard to the Capacity Tier ‘Move Policy Window’. If it’s been configured so that only GFS backups are offloaded, a cool tier is often better/cheaper. Remember, the backup/backup copy job retention targeting the SOBR with the capacity tier should be set to at least 30 days to avoid early deletion fees from Azure.

For other Veeam use cases, even though the per-GB storage cost is higher, the Blob hot tier often works out to be cheaper since the cool tier has higher API costs, and you don’t want too many things moving or changing and incurring transaction costs.

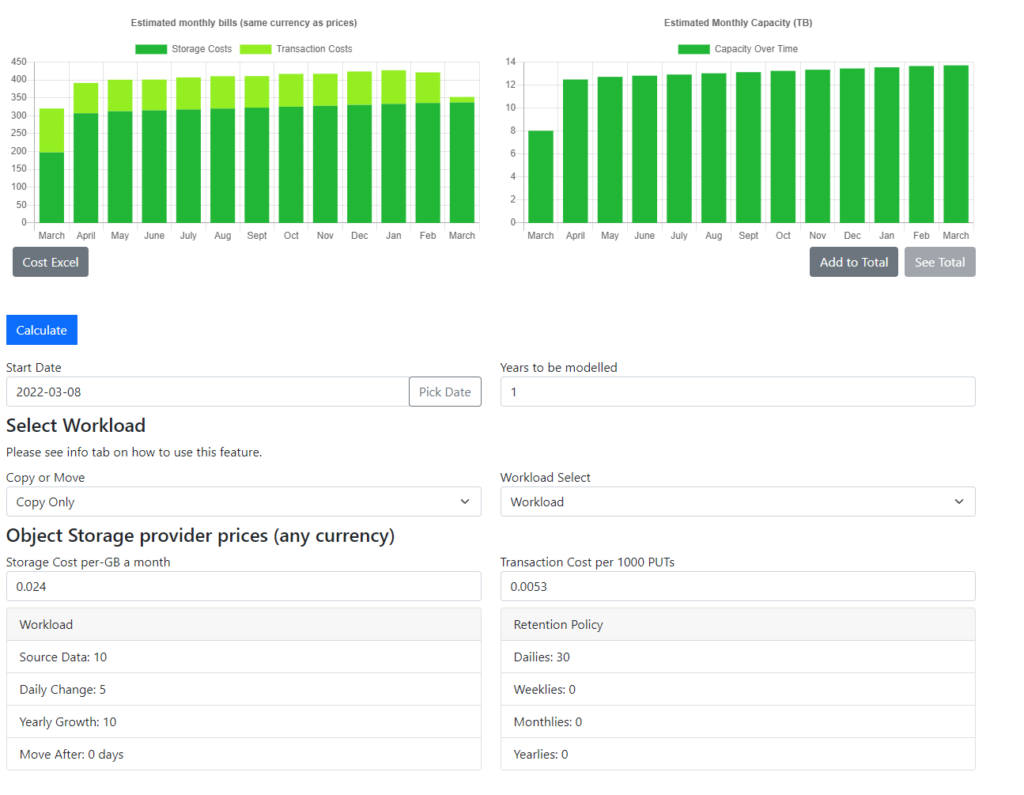

A good tool for comparing the difference between hot and cool tiers is the Veeam Size Estimator Tool (VSE) by EMEA Veeam Solutions Architects. This calculator includes an object storage monthly bills estimate based on the inputted workloads being protected.

Input the ‘Storage Cost per-GB a month’ and the ‘Transaction Cost per 1000 PUTs’ for the region and Blob tier being considered to help estimate the Azure charges.
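The trade-off between those two inputs can be illustrated with a simplified model that only counts capacity and PUT transactions. This is a minimal sketch, not how the VSE tool calculates its estimate: the function names are hypothetical and the prices are placeholders chosen for illustration, not real Azure rates, so substitute the figures for your own region and tier.

```python
def monthly_cost(stored_gb: float, put_operations: int,
                 price_per_gb: float, price_per_1000_puts: float) -> float:
    """Estimated monthly charge: capacity plus PUT transactions only."""
    storage = stored_gb * price_per_gb
    transactions = put_operations / 1000 * price_per_1000_puts
    return storage + transactions


def cheaper_tier(stored_gb: float, put_operations: int, tiers: dict) -> str:
    """Name of the tier with the lowest estimated monthly cost."""
    return min(
        tiers,
        key=lambda name: monthly_cost(stored_gb, put_operations, **tiers[name]),
    )


# Placeholder prices for illustration only, NOT real Azure rates.
EXAMPLE_TIERS = {
    "hot": {"price_per_gb": 0.02, "price_per_1000_puts": 0.005},
    "cool": {"price_per_gb": 0.01, "price_per_1000_puts": 0.01},
}

# Lots of data, few writes: the lower per-GB price wins.
print(cheaper_tier(10_000, 5_000_000, EXAMPLE_TIERS))  # cool
# Little data, many writes: the lower transaction price wins.
print(cheaper_tier(1_000, 50_000_000, EXAMPLE_TIERS))  # hot
```

A fuller estimate would also include read operations, data retrieval, egress and early deletion charges.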

Network Endpoint

Storage Account blob containers are accessible via the following communication methods:

Public Endpoint – Public Endpoints provide access to the storage account from anywhere across the globe. This means authenticated requests can originate from inside Azure or anywhere on the internet. It also means the storage account is potentially visible to external attack and compromise.

Private endpoints – Private endpoints provide a dedicated IP address to the storage account from within the address space of the virtual network. This enables network tunnelling without needing to open on-premises networks up to all of the IP address ranges owned by the Azure storage clusters.

For customers with a direct on-premises to Azure connection such as an ExpressRoute or site-to-site VPN, I typically see private endpoints created for the storage account and the public endpoint disabled. This method of communication ensures the Blob container is only accessible over the organisation’s site-to-site connection or end-user VPN client.
